“CAN YOU PLAY A SONG I’D LIKE?”

“WHAT’S THE FASTEST ROUTE TO THE AIRPORT?”

“IS IT GOING TO RAIN TODAY?”

People ask these sorts of questions every day—to each other, and, increasingly, to their gadgets.

On computers, phones, tablets, and an ever-growing assortment of smart devices, interactive platforms like Apple’s Siri, Amazon’s Alexa, and Google’s Assistant absorb our queries and respond rapidly with stunning accuracy and personalization.

Artificial intelligence (AI) makes exchanges like these possible. Physicians, researchers, data scientists, and others at Brigham and Women’s Hospital are engaged in a dazzling array of AI projects across many different disciplines.


“Traditionally, AI is the scientific field seeking to enable machines to demonstrate intelligence,” says Adam Landman, MD, chief information officer at the Brigham. “This includes the ability to learn in real time and learn from previous experience. AI includes the fields of natural language processing, machine learning, computer vision, and robotics, among others.”

MEDICINE’S AI REVOLUTION

AI has been evolving alongside technological and mathematical advancements for 70 years. However, three breakthroughs in the past decade paved the way for its proliferation: 1) the availability of extraordinary amounts of electronic data, 2) the advent of massive computational power and storage, and 3) increasingly sophisticated algorithms, the instructions that tell computers how to examine and manipulate data to solve a problem or complete a task.

“Algorithms are getting more sophisticated,” says Keith Dreyer, DO, PhD, chief data science officer for the Departments of Radiology at the Brigham and Massachusetts General Hospital. “At the same time, the volume of data is accelerating and becoming more available in the healthcare practice.”

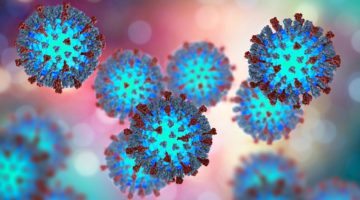

This availability of information is critical, Dreyer says, because machine learning—the most widely practiced form of AI today—can learn how to act with precision only by processing data related to the problem at hand. Dreyer offers an example of how big data helps an artificial neural network—the computer-based brain of a popular machine learning method—distinguish cats from dogs.

DOGS VS. CATS

To learn how to distinguish dogs from cats, an artificial neural network must process thousands of both animals’ images, training itself many times until it can accurately differentiate the pets on its own.

“You show the neural network thousands of dogs and cats—all different kinds, over and over,” Dreyer says. “You keep turning the artificial neurons’ proverbial tuning dials in software hundreds of millions of times to improve the program’s accuracy. Computers work so quickly now that you could do this in a matter of hours, where around five years ago, it took months. Once it’s been trained, you could give the trained artificial neural network brand new pictures of dogs and cats it’s never seen before, and it would accurately identify them.”
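Dreyer's "tuning dials" can be made concrete with a minimal Python sketch. It swaps the deep network and real photos for a single artificial neuron (logistic regression, the smallest possible neural network) trained on two invented numeric features, called "ear pointiness" and "snout length" here. The feature names, data, function names, and learning rate are all illustrative assumptions. Each pass over the training set nudges the neuron's weights (the dials) in the direction that reduces its error, and the trained neuron is then scored on animals it has never seen.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the sketch is reproducible

def make_animal(label):
    """Return ([ear_pointiness, snout_length], label) for an invented animal.

    label 0 = cat, 1 = dog. Features and their typical values are made up;
    a real network would learn directly from image pixels.
    """
    center = (0.8, 0.3) if label == 0 else (0.3, 0.8)
    return [rng.gauss(c, 0.1) for c in center], label

train_set = [make_animal(i % 2) for i in range(400)]
test_set = [make_animal(i % 2) for i in range(200)]  # never used for training

def predict(weights, bias, x):
    """Probability that x is a dog, according to one sigmoid neuron."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

weights, bias = [0.0, 0.0], 0.0  # the "tuning dials", all starting at zero
learning_rate = 1.0

for epoch in range(300):
    # Average gradient of the cross-entropy loss over the training set.
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, y in train_set:
        error = predict(weights, bias, x) - y
        grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
        grad_b += error
    n = len(train_set)
    # Turn every dial slightly in the direction that lowers the error.
    weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b / n

correct = sum((predict(weights, bias, x) >= 0.5) == (y == 1) for x, y in test_set)
accuracy = correct / len(test_set)
print(f"accuracy on unseen animals: {accuracy:.1%}")
```

A real image classifier has millions of dials and learns from raw pixels, which is why it needs thousands of examples, but the basic loop of predict, measure the error, and adjust the weights is the same.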

He says that today, computers and humans are at the beginning stages of thinking similarly, except that the computer uses electronics and the brain uses biology. However, he emphasizes that because the technology is still young, certain kinds of AI require much more data to learn than humans do.

“Some machine learning methods involve massive data input and continual refinement to identify objects such as dogs and cats, whereas a human might need only a few examples,” Dreyer says.

Now, replace the dog and cat images with thousands of healthy and diseased lung images. They could be fed into a neural network, training it to identify lung cancer nodules.

“We have a tremendous amount of information on every patient—imaging data, lab data, clinical data, pharmacologic data, and much more,” says Jeffrey Golden, MD, chair of the Department of Pathology. “That information lives in different places, a little bit siloed, and is rarely integrated in a sophisticated way. We want to be able to look at all that data simultaneously and interpret it in ways that will help achieve the goal of predicting, preventing, and identifying disease or treating it earlier.”

According to Golden, AI enables the healthcare approach called precision medicine.

“At the Brigham, precision medicine has to do with better identifying patients with various disorders and providing them with treatment tailored for them, either before disease onset or earlier in the disease process, when you have a greater chance at a successful intervention,” says Golden. “We do that through the use of AI and big data.”

PREDICTING AND PREVENTING INFECTION

Among the wide range of AI-driven precision medicine projects underway at the Brigham is a robust effort to determine how to prevent patients from getting sick from the Clostridioides difficile bacterium. The most common hospital-acquired pathogen in the United States, C. difficile can cause symptoms ranging from diarrhea to lethal colon inflammation and recurs in 25 percent of patients.

Georg Gerber, MD, PhD, MPH, FASCP, chief of computational pathology in the Department of Pathology and co-director of the Massachusetts Host-Microbiome Center, leads cutting-edge research efforts to tackle C. difficile. He is one of the few investigators in the world who designs sophisticated AI models to understand how the microbiota in the gut—beneficial microbes that colonize our bodies—may enable or thwart C. difficile infections in patients. With these findings, he and his colleagues will develop new diagnostic tests and treatments.

“Our machine learning tools are determining which microbes and their activities in the gut can prevent C. difficile infection in the first place, or limit recurring infection,” Gerber says. “Using that information, we could then develop diagnostic tests to predict who is susceptible to the infection, and ultimately create personalized therapeutics from living beneficial bacteria to prevent the disease or its recurrence.”

The amount of data to process for this work is immense, especially since the microbiome is an ever-changing ecosystem of trillions of microbes.

“It’s not only the number of variables we’re measuring but also the complexity,” Gerber says. “We can’t look at the organisms independently; we have to look at how they interact.”
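One textbook way to capture why organisms cannot be studied independently is the generalized Lotka-Volterra (gLV) model, in which each microbe's growth rate depends on the abundances of all the others through an interaction matrix. The Python sketch below is an illustration under that assumption, not a description of the models Gerber's group actually uses; the two-species community, growth rates, interaction strengths, and the `simulate_glv` helper are all invented. It shows a hypothetical pathogen that thrives on its own but is driven out when a beneficial commensal is present.

```python
def simulate_glv(x0, r, A, dt=0.01, steps=2000):
    """Integrate generalized Lotka-Volterra dynamics with forward Euler.

    dx_i/dt = x_i * (r_i + sum_j A[i][j] * x_j)
    x0: starting abundances, r: intrinsic growth rates, A: interaction matrix.
    """
    x = list(x0)
    n = len(x)
    for _ in range(steps):
        growth = [r[i] + sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        # Euler step; clamp at zero so abundances never go negative.
        x = [max(0.0, x[i] + dt * x[i] * growth[i]) for i in range(n)]
    return x

# Hypothetical community: index 0 = beneficial commensal, index 1 = pathogen.
r = [1.0, 1.0]        # intrinsic growth rates
A = [[-1.0, 0.0],     # the commensal limits only itself
     [-2.0, -1.0]]    # the commensal strongly inhibits the pathogen

with_commensal = simulate_glv([0.1, 0.1], r, A)
without_commensal = simulate_glv([0.0, 0.1], r, A)
print(f"pathogen with commensal:    {with_commensal[1]:.4f}")
print(f"pathogen without commensal: {without_commensal[1]:.4f}")
```

Even in this toy, the pathogen's fate cannot be read off its own parameters; it hinges on the entry linking it to the commensal. With many species, the interaction matrix has one entry per ordered pair, so it grows with the square of the number of species.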

THE AI QUICK REFERENCE GUIDE

Algorithm

“A set of instructions or rules to carry out a task or solve a problem.” —Adam Landman, MD

Artificial intelligence

“Traditionally, AI is the scientific field seeking to enable machines to demonstrate intelligence. This includes the ability to learn in real time and learn from previous experience. AI includes the fields of natural language processing, machine learning, computer vision, and robotics, among others.” —Landman

Artificial neural network

“Inspired by the brain’s neural network and created using computers, artificial neural networks can use software to simulate a neuron, a nerve cell that processes and transmits information. You can then digitally connect these artificial neurons via software or hardware to form the network.” —Keith Dreyer, DO, PhD

Big data

“In medicine, massive amounts of information—about every patient, condition, procedure, and drug—that can be analyzed to diagnose, treat, and predict disease.” —Jeffrey Golden, MD

Machine learning

“A field of AI that allows machines to learn from and make predictions from data using sophisticated computational algorithms.” —Dreyer

Precision medicine

“Understanding everything that encompasses a patient—their clinical and imaging data, how they’ve responded to medicines, how their proteins fold, and much more—and using the information to treat that individual. We’ll build algorithms that allow us to predict what will happen with groups of patients and then drill down to figure out what should be done for individual patients.” —Golden
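The glossary's description of an artificial neural network (simulate neurons in software, then connect them into a network) can be illustrated with a minimal Python sketch. Each call to the hypothetical `neuron` function computes a weighted sum of its inputs and fires if the sum is positive; wiring three of them into two layers computes XOR (true when exactly one input is true), a function that no single neuron of this kind can compute. The weights are hand-picked for illustration rather than learned.

```python
def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, then a step
    activation that fires 1 if the sum is positive and 0 otherwise."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def xor_network(a, b):
    """Three neurons connected into a two-layer network that computes XOR."""
    h_or = neuron([a, b], [1, 1], -0.5)           # hidden neuron: fires if a OR b
    h_and = neuron([a, b], [1, 1], -1.5)          # hidden neuron: fires if a AND b
    return neuron([h_or, h_and], [1, -1], -0.5)   # output: OR but not AND

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

The output neuron never sees the raw inputs, only the hidden neurons' answers; that layering is what lets connected neurons do things an individual neuron cannot.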

According to Lynn Bry, MD, PhD, director of the Massachusetts Host-Microbiome Center at Partners HealthCare, Gerber’s algorithms have dramatically cut down the time and effort that would have been required to conduct the research without an assist from AI.

“When you’re trying to think through all possibilities on the microbe side and the host side, you can get lost,” Bry says. “If I had to test all possible combinations of which specific microbes are needed to prevent C. difficile infection, that’d be over a million possibilities for just 20 microbes. It’s not something you’re going to eyeball; it can’t be done in a spreadsheet. Georg’s algorithm gave us a short list of combinations to test.”
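Bry's figure follows from simple counting: each of the 20 microbes is either in or out of a candidate cocktail, so there are 2^20 possible combinations. The short Python sketch below checks that arithmetic two ways; it does not attempt to reproduce Gerber's algorithm, which the article does not describe.

```python
from math import comb

N_MICROBES = 20

# Each microbe is either included or left out, giving 2**N combinations
# (this count includes the empty "cocktail" with no microbes at all).
total = 2 ** N_MICROBES

# The same total, counted by cocktail size k: the sum of C(N, k) for k = 0..N.
by_size = sum(comb(N_MICROBES, k) for k in range(N_MICROBES + 1))

# Only non-empty combinations could actually be tested.
testable = total - 1

print(f"{total:,} combinations ({testable:,} non-empty)")
# → 1,048,576 combinations (1,048,575 non-empty)
print("cocktails of 1 to 5 microbes:", sum(comb(N_MICROBES, k) for k in range(1, 6)))
# → cocktails of 1 to 5 microbes: 21699
```

Even restricting the search to cocktails of five or fewer microbes leaves 21,699 candidates, far too many to test one by one in animals, which is why a computational shortlist matters.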

With that targeted data in hand, Gerber, Bry, and Jessica Allegretti, MD, MPH, director of clinical trials in the Brigham’s Crohn’s and Colitis Center, were able to identify which microbes in the gut prevent and treat C. difficile in animal models of the infection.

“The set of bacteria we found can cure C. difficile in mice. It’s amazing—mice who are very sick fully recover,” Gerber says. “The next step will be to do clinical trials in people to see how well this works.”

MAKING MEDICAL IMAGING FASTER, BETTER, AND MORE NIMBLE

Among the many other exciting AI projects at the Brigham is the use of machine learning to improve the speed and quality of medical imaging.

While MRI’s excellent resolution enables specialists to view intricate details in the body, it’s also one of the slowest, most expensive imaging options. Its powerful magnet also makes the scanner immobile and incompatible with nearby metal objects. Ultrasound images, on the other hand, are less clear, but the technology is fast, simple, inexpensive, and portable.

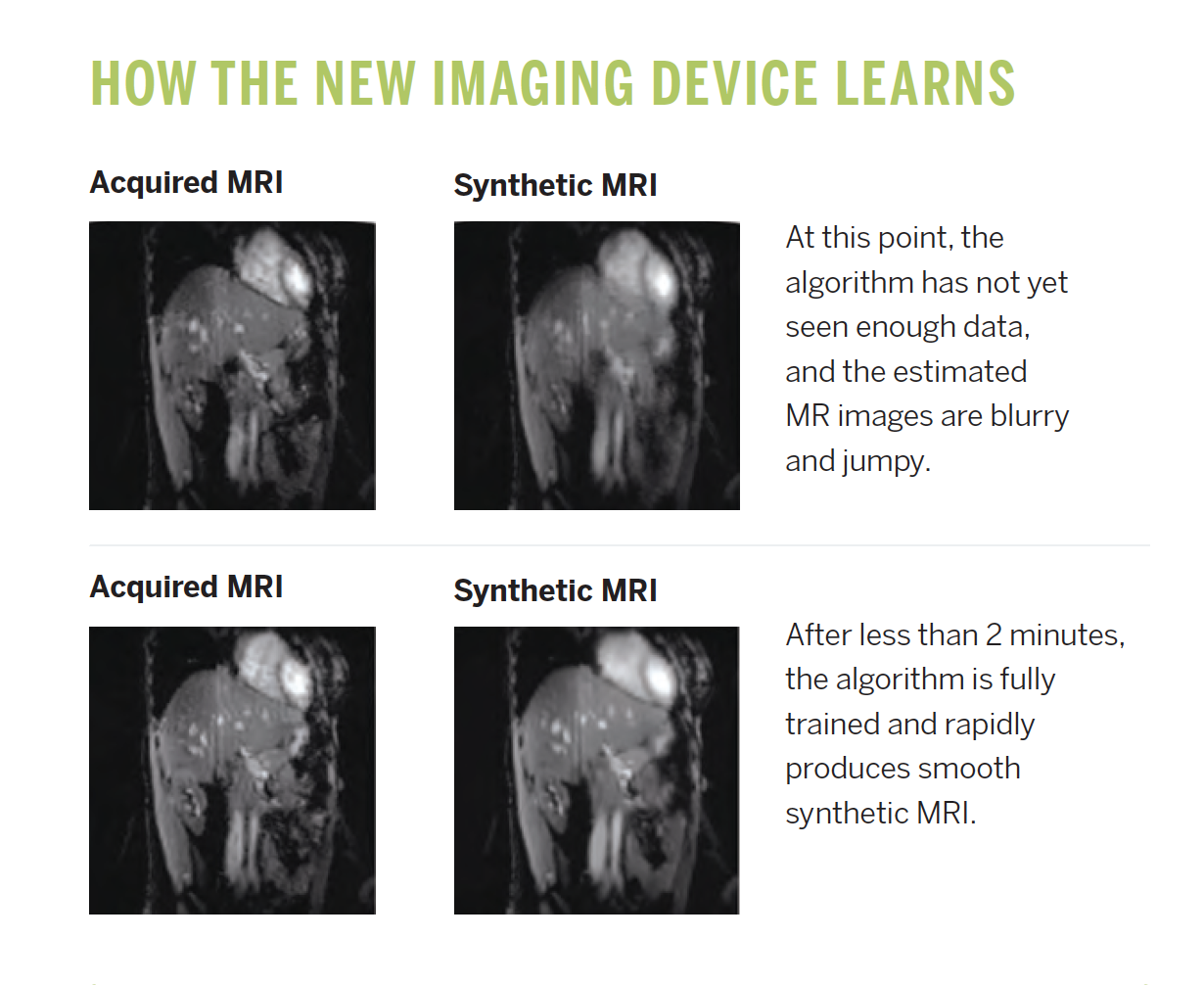

“Using machine learning, we’re combining the MRI’s quality and the ultrasound’s speed into something new that gives us the best of both worlds,” says Bruno Madore, PhD, associate professor of radiology and director of the Brigham’s Advanced Lab for MRI and Acoustics (ALMA). Madore and his colleagues in the ALMA have developed a unique hardware and software system that acquires ultrasound and MRI data simultaneously to track the movement of internal organs as a patient breathes.

For example, while a patient undergoes a traditional MRI scan, a small, stripped-down ultrasound device on the abdomen acquires an unprocessed, imageless signal from the liver hundreds of times per second. At the same time, the MRI scanner acquires images of the liver at a much slower rate.

“Because we’re getting the MRI data and the ultrasound data at the same time, we can use AI to learn correlations between these two different types of signals,” Madore says. “In just a minute or two, the algorithm learns how to convert the incoming ultrasound signals into MRI-looking images, so that the little ultrasound device essentially becomes a surrogate for the MRI scanner.”

The result is a stream of synthetic MRI images that accurately match the actual scans, with added flexibility and a higher frame rate.
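The core idea Madore describes, learning a correlation from paired ultrasound and MRI data at the slow MRI rate and then generating MRI-like frames from ultrasound alone at a much faster rate, can be sketched with ordinary least-squares regression. Everything here is a toy assumption: the "ultrasound" is reduced to one noisy number tracking breathing phase, the "MRI image" is eight pixels whose brightness varies linearly with that phase, and the frame rates and helper names are invented. ALMA's actual system works with raw ultrasound signals and a learned model whose details the article does not give.

```python
import math
import random

rng = random.Random(1)  # fixed seed so the toy data are reproducible
N_PIXELS = 8            # a toy one-dimensional "MRI image"

# Invented ground truth: each pixel's brightness shifts linearly with breathing.
BASE = [rng.uniform(0.2, 0.8) for _ in range(N_PIXELS)]
GAIN = [rng.uniform(-0.3, 0.3) for _ in range(N_PIXELS)]

def breathing(t):
    """Breathing phase in [-1, 1] at time t seconds (one breath every 4 s)."""
    return math.sin(2 * math.pi * t / 4.0)

def ultrasound_feature(t):
    """Stand-in for the raw ultrasound echo: a noisy reading of breathing phase."""
    return breathing(t) + rng.gauss(0, 0.02)

def mri_frame(t):
    """Stand-in for a slow MRI frame: breathing-driven pixels plus noise."""
    phase = breathing(t)
    return [b + g * phase + rng.gauss(0, 0.01) for b, g in zip(BASE, GAIN)]

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope

# Training: simultaneous ultrasound + MRI, limited by the slow MRI frame rate.
times = [0.7 * k for k in range(60)]
pairs = [(ultrasound_feature(t), mri_frame(t)) for t in times]
features = [f for f, _ in pairs]
model = [fit_line(features, [frame[k] for _, frame in pairs]) for k in range(N_PIXELS)]

def synthesize(feature):
    """Turn one ultrasound reading into an MRI-looking frame."""
    return [a + b * feature for a, b in model]

# Inference ("scannerless"): ultrasound only, 100 readings per second for 2 s.
stream = [synthesize(ultrasound_feature(k / 100)) for k in range(200)]

# Check one synthetic frame (from the noise-free phase) against the true pixels.
t_check = 1.23
truth = [b + g * breathing(t_check) for b, g in zip(BASE, GAIN)]
estimate = synthesize(breathing(t_check))
max_error = max(abs(e - tr) for e, tr in zip(estimate, truth))
print(f"{len(stream)} synthetic frames, max pixel error {max_error:.4f}")
```

In this toy, once the per-pixel fits are learned, producing a frame is just arithmetic on a single ultrasound reading, so the surrogate can run far faster than the scanner it imitates.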


Madore adds, “Once it’s trained, we can take the patient out of the MRI scanner and to a different room—such as an operating room—and the little ultrasound device will keep generating MRI-looking images that properly capture breathing motion. We call this scannerless real-time imaging.”

This feat untethers patients and medical professionals from immobile MRI machines, whose powerful magnets require special rooms and preclude the use of nearby metal objects, such as surgical tools. ALMA’s new technology could liberate MRI scans, bringing them into operating rooms so surgeons can track their tools’ movements and patients’ bodily functions in real time with extreme precision.

“For example, these scans could guide a needle biopsy into the liver so that it reaches the right target, or they could help track the motion of abdominal tumors during breathing so that radiation beams might better target them,” says Madore. “We anticipate testing this hybrid imaging system in clinical applications within the next two years for image-guided therapies.”

FREEING UP EXPERTS

Much like the Amazon, Apple, and Google smart devices that make our days easier, AI-assisted healthcare will increase medicine’s precision and efficiency by taking time-consuming tasks off specialists’ plates—allowing them instead to focus more intensively on creating better healthcare solutions for patients.

“AI and machine learning won’t replace specialists,” Madore says. “For example, radiologists will rely on AI-based tools to help them handle the ever-growing amount of information presented to them. As medical imaging devices are becoming faster, better, and more widespread, they create flows of data that are proving increasingly difficult for radiologists to handle and interpret, and AI-based tools will provide much-needed help and relief.”

Dreyer agrees.

“For the last hundreds of thousands of years, the human brain’s evolution has crept along,” he says. “For the last 70 years, computational abilities have moved at a much faster pace. We’re not quite there yet, but at some point, computers will have more computing capability than the human brain. That doesn’t mean cyborgs are going to take over. We need to think about how to include these other intelligent components we can use when we humans need augmented help.”

EVERYDAY AI

Email spam filters learn to recognize and move junk mail to a spam folder.

Credit card companies analyze how a customer typically shops and spends to keep genuine transactions from being declined.

Google Maps finds the correct address through a system that learned to read street names and addresses from billions of Street View images.

Facebook suggests who to tag in photos through facial recognition software.

Mobile banking apps automatically interpret words and dollar amounts to deposit scanned checks.

Ridesharing services such as Uber and Lyft estimate arrival times for rides and price them in real time, based on supply and demand.

Amazon’s Echo device uses sophisticated speech recognition to answer questions and perform virtual tasks quickly.
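The first item on this list, the email spam filter, is often introduced through a naive Bayes classifier, which learns how often each word appears in spam versus legitimate mail and then scores new messages by those frequencies. The Python sketch below is a minimal version under stated assumptions: the six training messages are invented, words are split on whitespace, add-one smoothing handles words the filter has never seen, and the class name is hypothetical. It is not a description of how any particular email provider filters spam.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase and split on whitespace (real filters do much more)."""
    return text.lower().split()

class NaiveBayesSpamFilter:
    """Multinomial naive Bayes over words, with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = Counter()

    def train(self, text, label):
        """Record one labeled example; label is "spam" or "ham" (legitimate)."""
        self.message_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, words, label):
        """log P(label) plus the sum of log P(word | label) over the words."""
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        total_words = sum(self.word_counts[label].values())
        score = math.log(self.message_counts[label] / sum(self.message_counts.values()))
        for word in words:
            count = self.word_counts[label][word]  # Counter returns 0 for unseen words
            score += math.log((count + 1) / (total_words + vocab_size))
        return score

    def is_spam(self, text):
        words = tokenize(text)
        return self._log_score(words, "spam") > self._log_score(words, "ham")

spam_filter = NaiveBayesSpamFilter()
for msg in ["win a free prize now", "free money click now", "claim your free prize"]:
    spam_filter.train(msg, "spam")
for msg in ["meeting moved to tuesday", "lunch at noon tomorrow", "draft of the report attached"]:
    spam_filter.train(msg, "ham")

print(spam_filter.is_spam("free prize now"))                   # → True
print(spam_filter.is_spam("report for the meeting tomorrow"))  # → False
```

Real filters train on vast numbers of labeled messages and use many more signals than word counts, but the principle of learning from examples rather than following hand-written rules is the same.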